Attention-based Isolated Gesture Recognition with Multi-task Learning

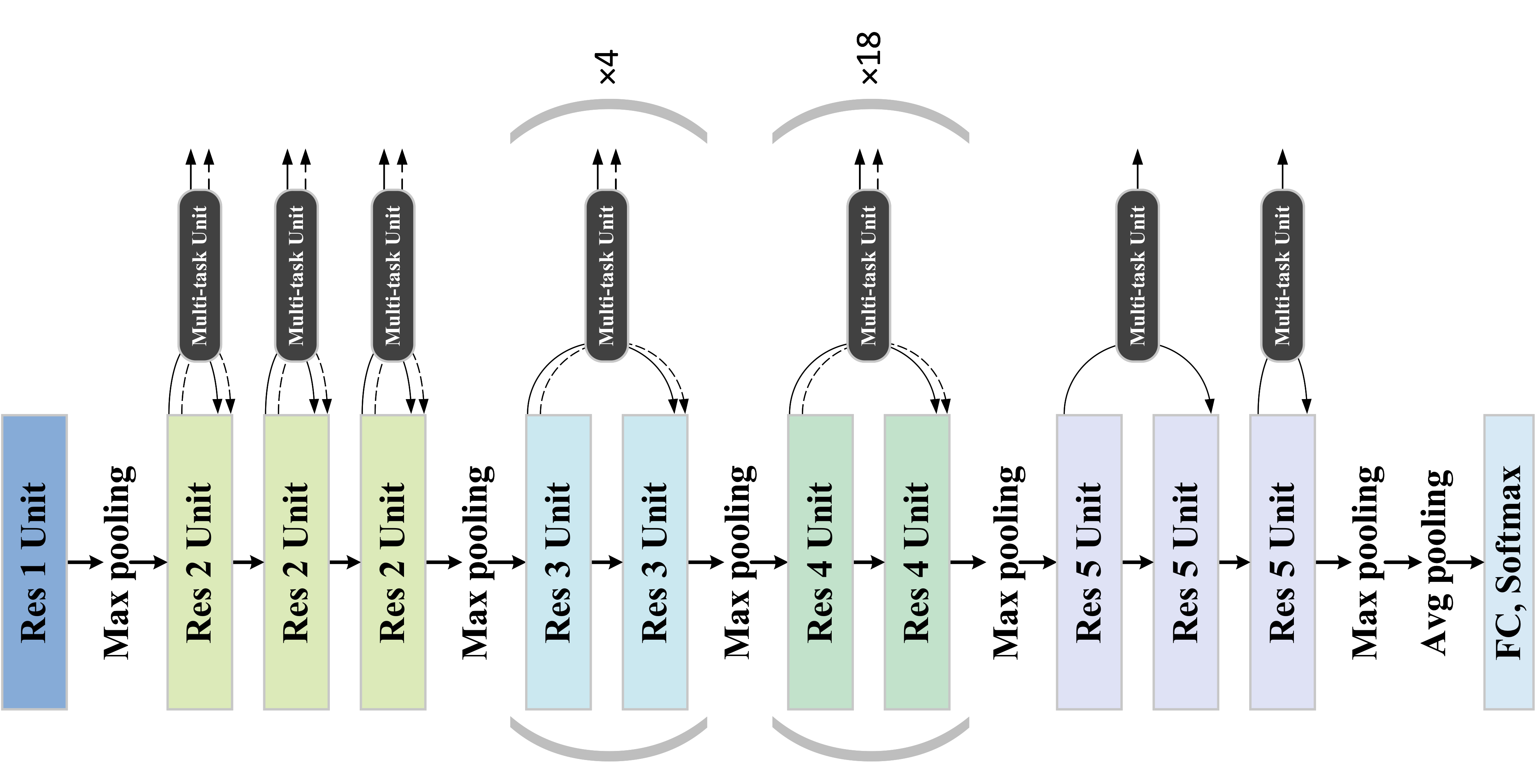

The proposed feature extraction network architecture.

Abstract

For deaf-mute people, sign language is the main way to communicate both within their community and with the outside world. An automatic sign language recognition system can bridge communication between deaf-mute people and hearing people and improve the quality of life of deaf-mute people, so it has important scientific research value and social impact. With the extensive study of deep learning, convolutional neural networks have been introduced into this field, and the performance of sign language recognition has improved significantly. Existing deep learning frameworks for sign language recognition generally need to detect and locate key areas such as the hands and face in order to reduce the influence of the background and other body regions on recognition. However, methods of this kind, which break detection and recognition down into several modules, require independent training of each module and additional preprocessing steps, which makes them relatively difficult to use, and they cannot effectively share feature information between region recognition and sign language classification. Therefore, we propose to combine the identification of key temporal and spatial regions with sign language classification in a single deep learning network architecture, using attention mechanisms to focus on effective features and realizing end-to-end automatic sign language recognition. Our proposed architecture improves the efficiency of sign language recognition while maintaining recognition accuracy comparable to state-of-the-art work.